The early 2000s were full of excitement and curiosity about new technology. As computers, cell phones, and the internet became household essentials, myths and misconceptions spread quickly. People believed ideas that now seem outdated or even humorous but felt plausible at the time. From warnings about radiation to assumptions about internet dangers, these myths shaped how people used their devices and moved through online spaces. They reflected a mix of fear, misinformation, and the rapid pace of innovation. Looking back at them shows how far technology has come and how our relationship with it has evolved. Here are 16 tech myths that many people actually believed in the 2000s.

1. Cell Phones Cause Brain Cancer

Many people believed that holding a cell phone to their head could cause brain cancer because of the radiation it emits. Studies at the time were inconclusive and often misunderstood by the public. Health organizations have since explained that mobile phones emit non-ionizing radiation, which is far less harmful than early warnings suggested, and decades of research have shown no definitive link between cell phone use and brain cancer. Still, fear of radiation led some people to buy special phone cases or use headsets constantly. This myth highlights how uncertainty about new technology can spread widely and influence behavior in unexpected ways.

2. Computers Could Catch Viruses Like Humans

In the 2000s, many people thought computers could catch viruses the same way humans catch colds. This misunderstanding led people to picture infected floppy disks and email attachments as carriers of a contagious illness that could make a computer "sick." In reality, computer viruses are malicious programs written by people to exploit software vulnerabilities; a disk or attachment is only a way of delivering that code, which typically has to run before it can do harm. Antivirus software emerged as a critical tool to detect and remove these programs. The myth reflected a natural tendency to relate technology to familiar concepts like human illness. Today, we understand that protecting computers requires software updates and security measures rather than fear of invisible pathogens.

3. More Megapixels Mean Better Camera Quality

Consumers believed that the more megapixels a camera had, the better its photos would be. Megapixels determine image resolution, but factors such as lens quality, sensor size, and lighting often matter more for clear photos. This misconception shaped early digital camera marketing and smartphone sales, and many people bought devices expecting perfect photos based solely on megapixel counts. Over time, photography enthusiasts and professionals emphasized that optics and software processing matter more than raw megapixels. The myth helps explain the confusion of the early digital photography boom and why modern cameras balance multiple features to achieve better image quality.

4. Closing Programs Speeds Up Your Computer

A common belief was that closing programs always made computers faster. Freeing memory can help when a system is running low on it, but many programs were designed to run efficiently in the background, so closing them often made little difference. Ending certain processes unnecessarily could even disrupt system functions or slow multitasking. Operating systems manage memory and processing in ways many users did not understand at the time. This misunderstanding led to repeated, unnecessary closures that sometimes caused frustration or lost work. Today, users are encouraged to trust the system's own management tools and to recognize that closing programs is not always the answer to performance problems.

5. Macs Cannot Get Viruses

Many people thought Apple computers were completely immune to viruses. This belief made Mac users less cautious about security, assuming their systems were invincible. In reality, Macs can be infected by malware and their users can fall for phishing attacks, although the Mac's smaller market share made it a less common target than Windows PCs. The myth fed overconfidence among Mac users and led some to put off using antivirus software. Awareness has grown over the years, and it is now widely recognized that all devices require proper security practices. This misconception shows how brand perception can influence behavior and why vigilance matters even with trusted systems.

6. More Bars Mean Better Cell Reception

It was commonly assumed that more bars on a cell phone guaranteed a good connection. Bars give a rough indication of signal strength, but they do not always reflect call quality or data speed accurately. Environmental factors, tower congestion, and device design all affect performance in ways the bar display does not show. People often moved around unnecessarily or worried when the bars fluctuated, assuming their service was unreliable. Today, reception quality is understood in broader terms that include data speed and connection stability. This myth demonstrates how simple visual indicators can be misinterpreted, creating misconceptions about how reliable a technology really is.

7. Charging Overnight Destroys Your Battery

Many believed that leaving devices plugged in overnight would ruin the battery. Early battery technology was less sophisticated, but lithium-ion batteries and their charging circuits include safeguards to prevent overcharging. The myth caused unnecessary anxiety and led users to unplug their devices obsessively. Manufacturers now generally indicate that devices can be left plugged in as needed without damage. This misconception illustrates how fear of technology can spread before consumers understand engineering advances. Proper battery care involves avoiding extreme temperatures and excessive charge cycles rather than worrying about overnight charging. Understanding the myth explains why users were so cautious during the early smartphone era.

8. Pop-Ups Could Hack Your Computer Instantly

In the 2000s, pop-up ads were feared as threats that could instantly hack a computer. Some malicious pop-ups did contain harmful scripts, but most were simply annoying advertisements. The myth grew because many users did not understand safe browsing practices or how web security worked. Internet safety education eventually made clear that closing pop-ups without clicking inside them and using pop-up or ad blockers reduced the risk. This myth shows how visible, disruptive features can create exaggerated fears. Knowing how threats actually work helps users approach technology with knowledge rather than panic, making browsing safer and less stressful.

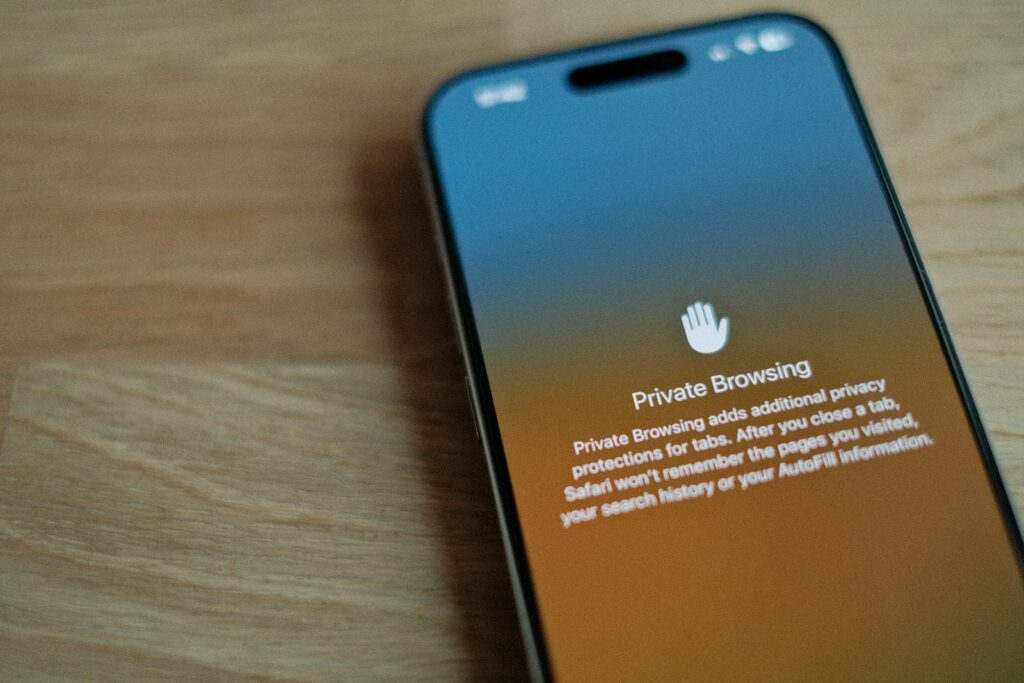

9. Private Browsing Makes You Invisible Online

Many believed that private or incognito browsing completely hid their online activity. In reality, this mode only keeps the browser from saving history and similar data on the device; it does not hide activity from websites, internet service providers, or network administrators. Believing they were anonymous, some users shared sensitive information carelessly. Additional privacy tools, such as VPNs, are needed for more comprehensive protection. This myth shows how simple interface features can create a false sense of security and why it is important to understand how a technology actually works before relying on it for privacy.

10. Deleting Files Permanently Removes Them

It was assumed that deleting a file completely erased it from a computer. In reality, deleting a file typically just marks the space it occupied as available for reuse, so the data often remained recoverable until it was overwritten. This misconception led users to think sensitive information was gone when it could still be accessed. Practices such as secure wiping or encryption are needed to make deleted data truly unrecoverable. The myth shows how early users misunderstood how digital storage works. Awareness of what deletion actually does has improved alongside modern operating systems, helping people protect their data and avoid accidental exposure of important information.

11. Internet Explorer Was the Only Browser Worth Using

Many people thought Internet Explorer was the standard for safe and reliable browsing. Other browsers existed but were less popular, so users assumed IE was the only real choice. This limited their exposure to alternatives that offered better speed, security, and features. Marketing and IE's place as the default browser on Windows reinforced this perception. Eventually, the rise of Firefox, Chrome, and others demonstrated the benefits of choice. This myth highlights how a dominant product can create assumptions about necessity and quality, shaping user habits and discouraging people from exploring potentially better options.

12. Viruses Spread Through Email Only

People believed that email attachments were the only way viruses could infect computers. Email was a major route, but viruses also spread through downloads, websites, and removable media. The myth led users to overlook other risks and focus their caution on email alone. Understanding multiple attack vectors became essential for digital safety, and awareness campaigns and antivirus programs helped correct the misconception by emphasizing comprehensive protection. Recognizing that threats come from many sources shows why proactive security matters and why no single precaution can fully protect against malware.

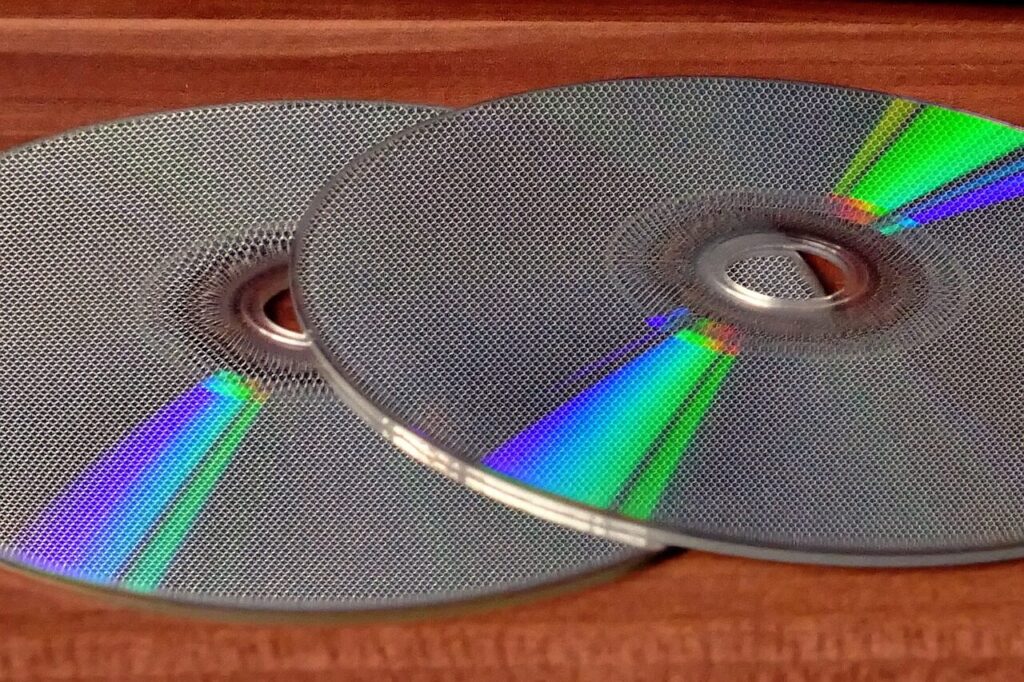

13. AOL CDs Could Hack Your Computer

Many people received AOL CDs in the mail and joked about "installing the internet" from a disc, while some believed the discs could somehow hack or take over a computer. These rumors played on curiosity and a limited understanding of how software installation worked. The discs contained legitimate installation programs, not harmful code, yet the urban legends persisted. The myth reflects how unfamiliar technology can be sensationalized into false fears. Today, most software is downloaded rather than installed from discs, but these early experiences shaped perceptions of software safety and made users more cautious about unknown media. The myth is a reminder of how novelty and unfamiliarity can fuel misconceptions.

14. Your Computer Needed Constant Defragmentation

It was widely believed that computers needed to be defragmented constantly, even daily, to work properly. Defragmenting a hard drive occasionally could improve performance, but doing it constantly was unnecessary and sometimes counterproductive. Modern operating systems handle defragmentation automatically, and solid-state drives store data differently, making the manual practice mostly obsolete. The myth caused many people to worry needlessly about their computer's health. It illustrates how early optimization advice was often misunderstood or overgeneralized. Understanding how technology actually works lets users keep their computers running well without excessive intervention, saving both time and stress.

15. Burning CDs Was Dangerous

Some people feared that burning CDs would damage their computer or destroy their data. CD burners were new and unfamiliar, and failed burns caused by software errors or hardware issues were often wrongly blamed on the user, reinforcing the idea that one wrong move could cause damage. In reality, burning a CD is a simple and safe process when the instructions are followed. The myth made some users hesitant to share files or make backups. Over time, confidence grew as people gained experience. This misconception highlights how new technology often triggers fear until familiarity and education provide reassurance, and why guidance and practice matter when adopting innovations.

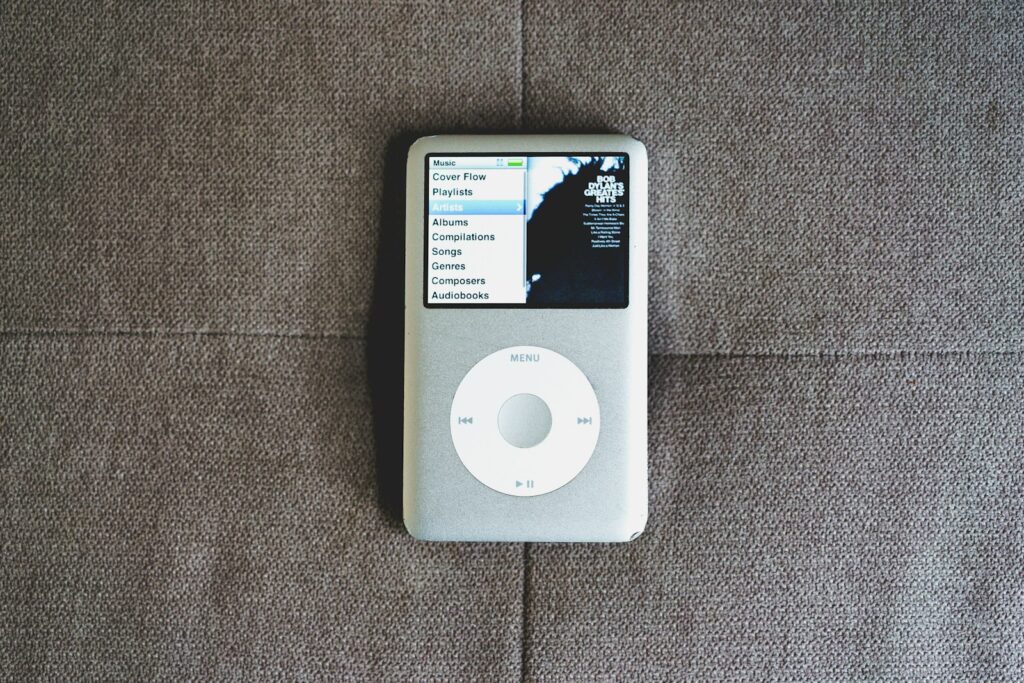

16. MP3s Would Permanently Damage Music Devices

When MP3 players first became popular, many believed that downloading or playing files from the internet could permanently damage devices. Concerns included corrupted files, viruses, or incompatible formats. In reality, MP3 players were designed to handle standard files safely, and risks were minimal when using trusted sources. This myth reflects the anxiety surrounding new technology and digital media. Over time, as users became more comfortable with file formats and device capabilities, these fears diminished. Understanding this myth shows how unfamiliar technology can create exaggerated warnings and how education and experience help users adopt innovations confidently.
